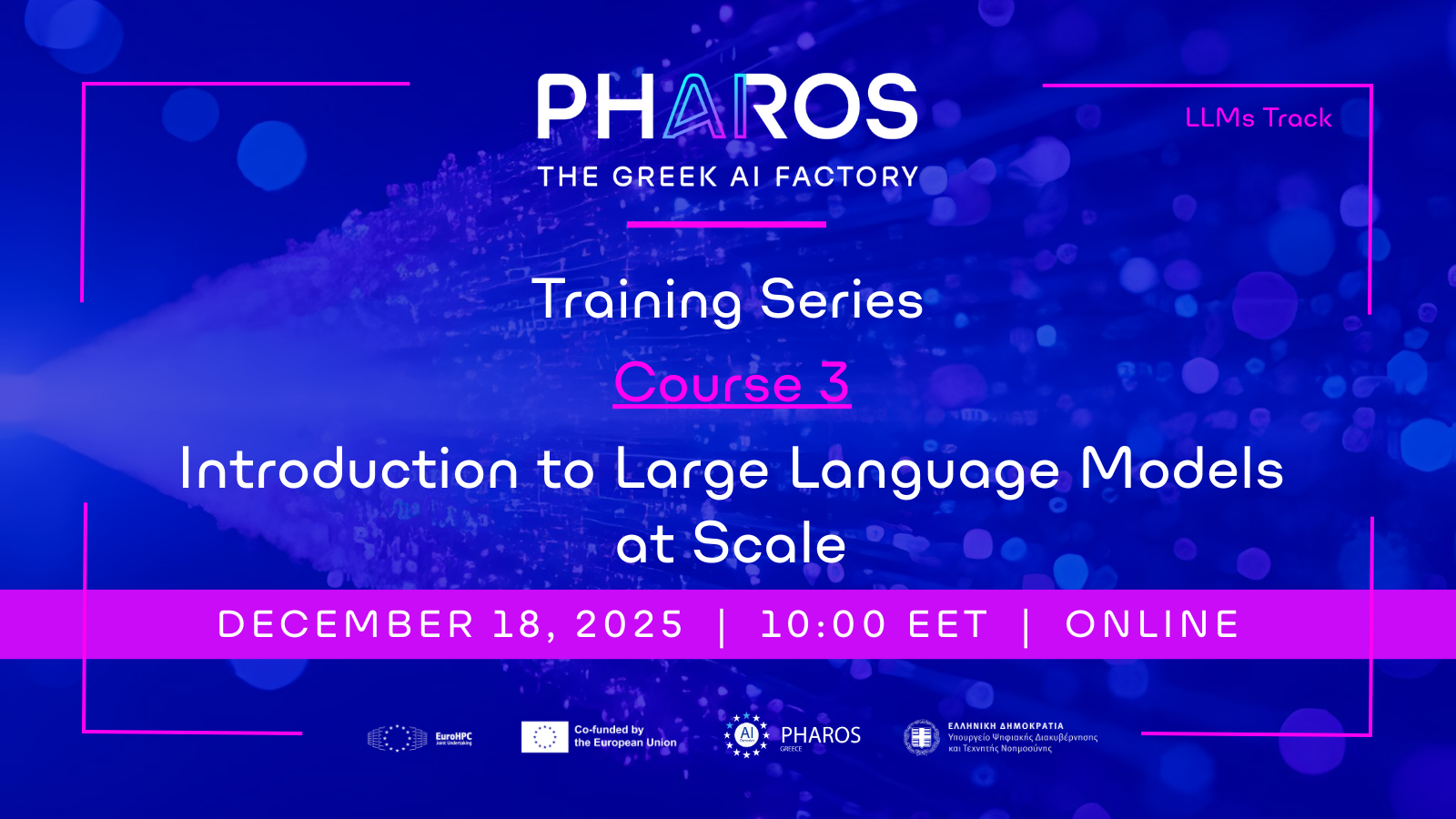

PHAROS AI Factory announces the 3rd course of its Training Series, "Introduction to Large Language Models at Scale", part of the "Large Language Models" track, to be held online via Zoom.

Date: December 18th, 2025, at 10:00 EET

Location: Online via Zoom

Presentation Language: Greek

Target Level: Intermediate

Audience: The primary target audience consists of AI/Machine Learning Engineers, Data Scientists, and HPC Engineers working in industries that leverage supercomputing for large-scale modeling and simulation. This includes professionals in the technology, finance, and research sectors who want to optimize performance and access advanced European infrastructure. The course also targets software developers who aim to build and scale next-generation LLMs, such as models for specialized language domains.

Course Description:

- Introduction to High-Performance Computing: We will analyze the fundamental components of an HPC system, including the roles of CPUs, GPUs, and nodes in parallel computing environments. The talk will then explore how HPC accelerates Artificial Intelligence (AI), specifically through Parallel Stochastic Gradient Descent (PSGD), and conclude with an overview of the TOP500 list and the European supercomputing ecosystem.

- How to Prepare an Access Proposal for Greek and European HPC Clusters: This talk provides an overview of the Greek and EuroHPC Joint Undertaking (JU) access calls and the key elements of a successful application, including the specific resource requirements and the evaluation criteria.

- Introduction to LLMs: We present the fundamentals of LLMs, focusing on the key components of an LLM architecture, including data tokenization, attention mechanisms, and other general architectural choices. We then proceed with training details and objectives, including techniques to combat overfitting.

- Building Greek Large Language Models: Meltemi & Krikri: A dynamic overview of the Greek Large Language Models Meltemi and Krikri, focusing on dataset choices, training and adaptation methods (fine-tuning), and evaluation approaches. This session offers a comprehensive first look at the technological foundations behind Greek LLMs, paving the way for their future advancement and setting the stage for the topics that will be presented in the PHAROS AI Factory's upcoming training programs.

- Fine-Tune LLMs on a Single GPU (hands-on): This talk explores the practical aspects of fine-tuning Large Language Models (LLMs) using the high-level Hugging Face Trainer API. We detail the process of adapting a Llama 3.2-1B-Instruct model to a specialized task using Hugging Face datasets. The core focus is on managing the training loop efficiently and comparing the performance of the base and fine-tuned models.

- Scalability of LLMs: Building on the Introduction to LLMs lecture, we turn to LLM inference, covering the sampling strategies used in practice and how what an LLM has learned is ultimately put to use. We conclude by introducing the emergent abilities of LLMs, i.e., human-like abilities that LLMs implicitly acquire at scale, which justify the need for HPC resources for efficient inference.

- Fine-Tune LLMs on a Node with Multiple GPUs (code presentation): This talk dissects a distributed fine-tuning workflow for LLMs using the Hugging Face Trainer and torch.distributed.run. The demonstration focuses on adapting a Llama 3.2-1B-Instruct model to a custom Markdown corpus, tokenized for causal language modeling. Key sections include setting up the multi-GPU environment with a Slurm job script and comparing the base and fine-tuned models on domain-specific questions.
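The Scalability of LLMs session refers to the sampling strategies used in practice during LLM inference. As a rough, non-authoritative illustration (not taken from the course material), the pure-Python sketch below shows three common strategies applied to a hypothetical vector of next-token logits: greedy decoding, top-k sampling, and nucleus (top-p) sampling, each with an optional temperature. The function names and the four-token vocabulary are illustrative assumptions; real inference libraries apply the same ideas to tensors over the full vocabulary.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; temperature < 1 sharpens, > 1 flattens."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [l / temperature for l in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def greedy(logits):
    """Greedy decoding: always pick the highest-scoring token."""
    return max(range(len(logits)), key=lambda i: logits[i])


def top_k_sample(logits, k, temperature=1.0, rng=random):
    """Top-k sampling: keep the k best tokens, renormalise, then sample."""
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    probs = softmax([logits[i] for i in top], temperature)
    return rng.choices(top, weights=probs, k=1)[0]


def top_p_sample(logits, p, temperature=1.0, rng=random):
    """Nucleus sampling: sample from the smallest set of tokens whose
    cumulative probability reaches p."""
    probs = softmax(logits, temperature)
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    nucleus, cum = [], 0.0
    for i in order:
        nucleus.append(i)
        cum += probs[i]
        if cum >= p:
            break
    return rng.choices(nucleus, weights=[probs[i] for i in nucleus], k=1)[0]


# Hypothetical scores for a 4-token vocabulary.
logits = [2.0, 1.0, 0.5, -1.0]
rng = random.Random(0)
print(greedy(logits))  # → 0
print(top_k_sample(logits, k=2, rng=rng))
print(top_p_sample(logits, p=0.9, temperature=0.7, rng=rng))
```

Greedy decoding is deterministic, while top-k and top-p trade some likelihood for diversity; lowering the temperature pushes both sampling methods back toward greedy behavior.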

Learning Objectives:

- Introduce the fundamental concepts of High-Performance Computing (HPC), including its hardware components and its role in accelerating AI through Parallel Stochastic Gradient Descent (PSGD).

- Provide a comprehensive understanding of the application process for accessing Greek and European HPC resources.

- Explore the technological foundations and development of Greek Large Language Models (LLMs), specifically Meltemi and Krikri.

- Offer practical, hands-on experience in fine-tuning LLMs efficiently in single- and multi-GPU environments, using tools such as the Hugging Face Trainer API and torch.distributed.run.

Prerequisites: Basic Python knowledge (for the hands-on part only)

Note: Please enter your institutional/corporate email when registering.

Instructors' profiles

- Dr. Nikos Bakas is a Senior Data Scientist at GRNET with a broad background in Artificial Intelligence. He has authored numerous publications across AI research areas, including Machine Learning, Numerical Methods, Optimization, and Large Language Models. He has served as principal investigator, researcher, and coordinator in multiple projects at research centers and universities. Dr. Bakas holds a Ph.D. from the National Technical University of Athens and has long-standing teaching experience. He also brings extensive programming expertise across a wide range of languages and frameworks, and the training seminars he has organized have reached a large community of engineers.

- Eleni Batsi is a Research Associate at the Institute for Language and Speech Processing (ILSP) of the Athena Research Center. She holds a degree in Linguistics and a Master’s Degree in Language Technology from the National and Kapodistrian University of Athens. Her research focuses on Large Language Models (LLMs), the development and evaluation of AI Agents, as well as training and knowledge-transfer activities in the field of Artificial Intelligence.

- Maria Lymperaiou is a postdoctoral researcher in the Artificial Intelligence and Learning Systems (AILS) laboratory at the National Technical University of Athens. She has a strong background in deep learning, natural language processing, and multimodal learning, with numerous applications in these fields, and specializes in Large Language Models (LLMs), vision-language systems, explainable AI models, and related applications.

- Giorgos Filandrianos is a researcher at the AILS Laboratory of NTUA and a Postdoctoral Researcher at Instituto de Telecomunicações in Lisbon, working on natural language processing and model explainability. His research covers reasoning, fairness, and counterfactual generation, with contributions to research projects and publications on LLM-based reasoning, discourse analysis, and interpretability methods aimed at revealing the decision-making processes of AI systems.

- Panagiota Gyftou is an HPC Users & Application Support Engineer at GRNET, specializing in High-Performance Computing for Machine Learning and Artificial Intelligence applications. She holds a Bachelor's degree in Informatics and Telecommunications from the National and Kapodistrian University of Athens (NKUA) and is currently pursuing a Master's in Informatics in the same department, focusing on Data, Information, and Knowledge Management.